INDUSTRY 4.0⁴

Unveiling the Future: A Deep Dive into AR, VR, XR, and Spatial Computing with a Focus on Apple's Vision Pro

Introduction:

In recent years, Augmented Reality (AR), Virtual Reality (VR), Extended Reality (XR), and Spatial Computing have evolved rapidly, shaping the way we interact with digital information and the physical world. This article explores the core concepts of these technologies, then examines the sensors and components of Apple's Vision Pro.

Understanding the Core Concepts:

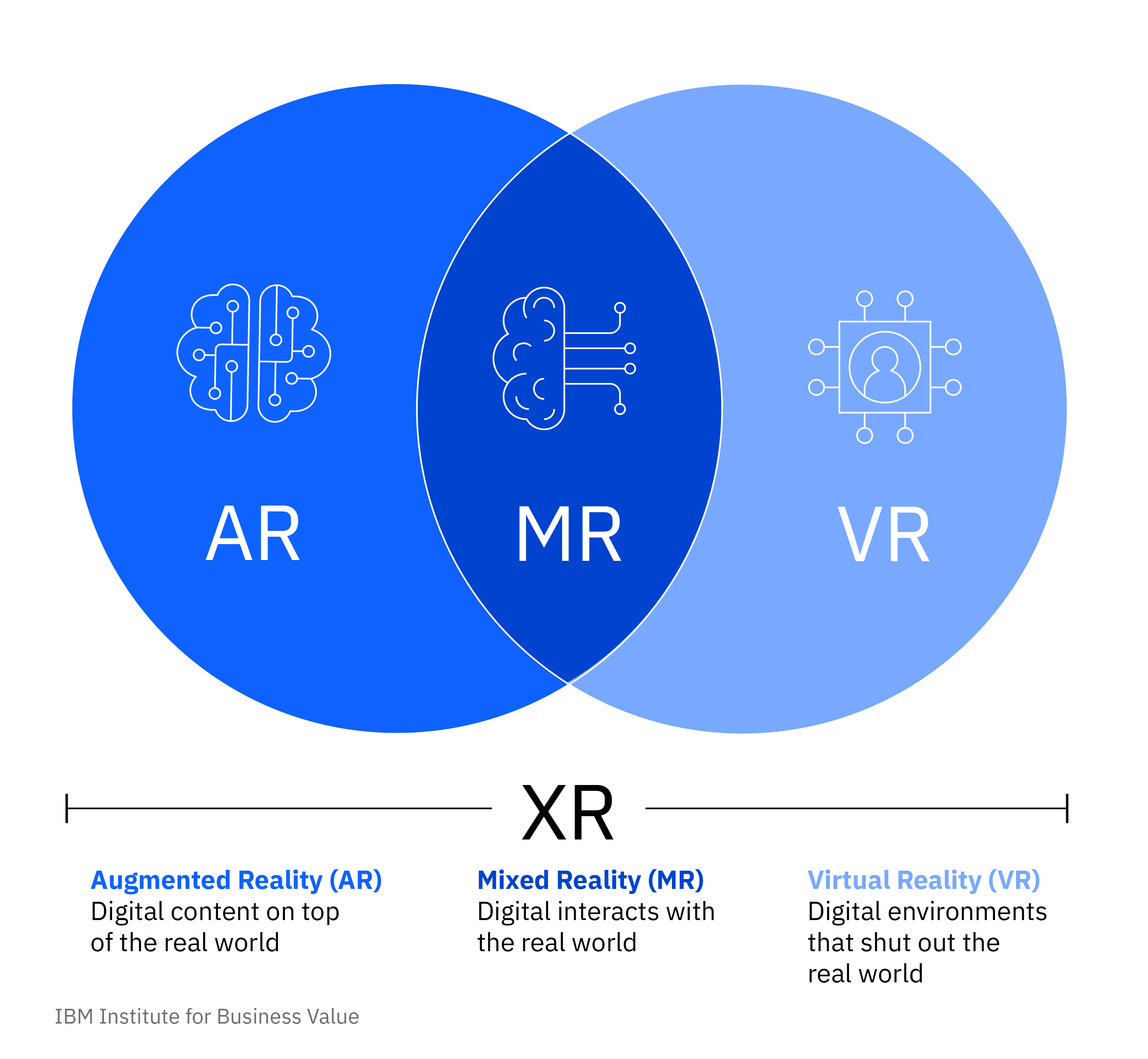

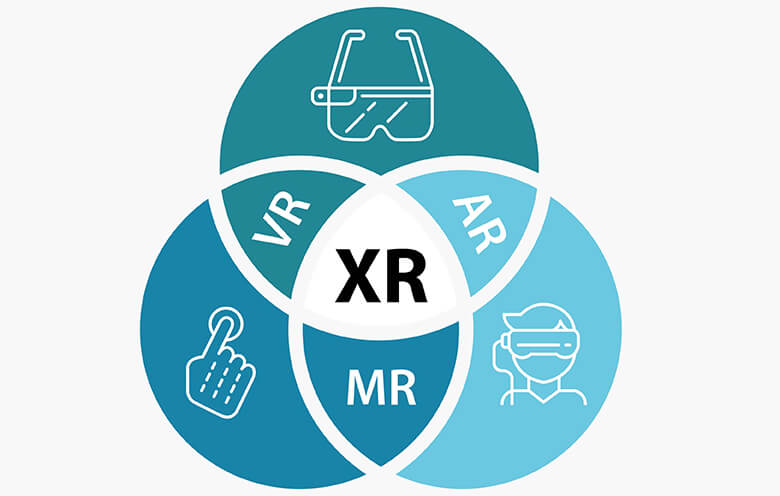

1. Augmented Reality (AR):

Definition and Basics: Overlaying digital content onto the real world.

Key Components: Cameras, sensors, and processors for real-time environment mapping.

2. Virtual Reality (VR):

Definition and Basics: Immersive digital experiences in a simulated environment.

Key Components: Head-mounted displays, motion controllers, and spatial audio systems.

3. Extended Reality (XR):

Definition and Basics: An umbrella term that encompasses AR, VR, and the mixed experiences in between.

Key Components: Combines the features of AR and VR, often with spatial computing elements.

4. Spatial Computing:

Definition and Basics: The use of space and location in computing to enhance user interactions.

Key Components: Depth-sensing cameras, LiDAR sensors, and advanced algorithms for spatial awareness.

Apple's Vision Pro Components

1. LiDAR Sensor:

Purpose: Measures distances by illuminating the surroundings with laser light and measuring the light that reflects back.

Application: Enhances depth perception, crucial for AR and spatial computing.

2. Depth-sensing Cameras:

Purpose: Capture detailed depth information of the surroundings.

Application: Enables precise mapping of the environment for more realistic AR experiences.

3. Advanced Processors:

Purpose: Handle the compute-intensive work of real-time rendering and spatial mapping.

Application: Ensures smooth and responsive AR/VR experiences on Apple devices.

4. Integration with visionOS and Apple's Ecosystem:

Purpose: Vision Pro runs visionOS rather than iOS, and it integrates with the rest of Apple's ecosystem for a cohesive user experience.

Application: Facilitates cross-device communication and sharing of AR experiences.

Open Source and Freely Available Materials for DIY Projects:

1. ARKit (iOS):

Notes: Apple's AR development framework, providing tools for creating AR applications.

Steps: Access ARKit documentation and tutorials on Apple's developer portal.

2. Unity3D with AR Foundation:

Notes: Cross-platform game engine supporting AR development through AR Foundation.

Steps: Explore Unity3D documentation and community forums for AR projects.

3. A-Frame (Web-based VR):

Notes: A web framework for building VR experiences using HTML and JavaScript.

Steps: Visit A-Frame's GitHub repository for code samples and documentation.

4. OpenCV (Computer Vision):

Notes: Open-source computer vision library with applications in AR and spatial computing.

Steps: Access OpenCV documentation and community resources for projects.

As we traverse the exciting landscape of AR, VR, XR, and spatial computing, Apple's Vision Pro stands as a testament to the relentless pursuit of immersive technology. Exploring these concepts and engaging in DIY projects using open source materials can empower enthusiasts and developers to contribute to the ever-growing field of spatial computing. The future promises boundless possibilities, and with the right tools, anyone can be a part of this transformative journey.

1. Definition of Spatial Computing:

Spatial Computing refers to the integration of physical space and digital information, allowing for a more natural and immersive interaction between humans and computers. It leverages technologies to understand, interpret, and respond to the spatial dimensions of the real world.

It marks a paradigm shift in human-computer interaction, enriching our digital experiences by bridging the gap between the physical and virtual worlds. Through advanced technologies like depth-sensing cameras, LiDAR sensors, and powerful processors, spatial computing enables a new era of natural, context-aware, and immersive interactions. As this field continues to evolve, we can anticipate even more innovative applications that seamlessly integrate digital content into our everyday lives.

2. Key Components of Spatial Computing:

a. Depth-sensing Cameras:

Depth-sensing cameras capture detailed information about the physical environment, creating a three-dimensional representation of space. This is crucial for accurate spatial mapping.
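To make the step from a depth image to a three-dimensional representation concrete, here is a minimal sketch that back-projects each depth pixel into a 3D point using the pinhole camera model. The function name `depth_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, not values from any particular device.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (metres) into camera-space 3D points.

    depth is a list of rows; a value of 0 marks a pixel with no reading.
    fx, fy, cx, cy are assumed pinhole intrinsics in pixels.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth reading
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A 2x2 toy depth image with one missing reading.
depth = [[2.0, 2.0],
         [0.0, 4.0]]
cloud = depth_to_points(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(cloud)
```

The resulting list of points is the raw material that a spatial map is built from; real pipelines typically add filtering and lens-distortion correction, which this sketch leaves out.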

b. LiDAR Sensors:

LiDAR (Light Detection and Ranging) sensors use laser light to measure distances, providing precise depth information. They are commonly used for creating detailed spatial maps in real time.
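One common way to turn a laser pulse into a distance is time of flight: the light travels to the surface and back, so the distance is half the round trip multiplied by the speed of light. The sketch below assumes that ranging method; the article does not say which method a given sensor uses, and the function name `tof_distance_m` is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s):
    """Distance to a surface from the round-trip time of a light pulse."""
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A pulse that returns after 20 nanoseconds hit something about 3 metres away.
distance = tof_distance_m(20e-9)
print(f"{distance:.3f} m")
```

The nanosecond scale of the round trip is why room-scale ranging needs very fast timing electronics.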

c. Advanced Processors:

Powerful processors handle complex computations, enabling real-time rendering, spatial mapping, and interaction. These processors are essential for delivering seamless and responsive spatial computing experiences.

3. Spatial Mapping and Understanding:

a. Environment Mapping:

Spatial computing involves mapping the physical environment, creating a virtual representation that aligns with the real world. This mapping is dynamic and continuously updated as the user moves through space.
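As a rough illustration of a map that is updated continuously, the sketch below keeps a small 2D occupancy grid and marks cells as occupied whenever a new batch of measured surface points arrives. The grid layout, the cell size, and the function name `update_grid` are assumptions made for the example; production systems usually build 3D meshes rather than flat grids.

```python
def update_grid(grid, points_xy, cell_size):
    """Mark every grid cell that contains a measured surface point as occupied."""
    rows, cols = len(grid), len(grid[0])
    for x, y in points_xy:
        col = int(x // cell_size)
        row = int(y // cell_size)
        if 0 <= row < rows and 0 <= col < cols:  # ignore points outside the map
            grid[row][col] = 1
    return grid


# A 3x4 grid of 0.5 m cells, refined by two successive scans.
grid = [[0] * 4 for _ in range(3)]
update_grid(grid, [(0.1, 0.1), (1.6, 0.9)], cell_size=0.5)
update_grid(grid, [(1.6, 0.9), (0.7, 1.2), (5.0, 5.0)], cell_size=0.5)
for row in grid:
    print(row)
```

Each call folds a new scan into the same grid, which mirrors how the virtual representation is refined as the user moves.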

b. Simultaneous Localization and Mapping (SLAM):

SLAM algorithms play a pivotal role in spatial computing. They allow devices to simultaneously map their surroundings and locate themselves within that mapped space, ensuring accurate positioning.
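The coupling between mapping and localization can be shown with a deliberately tiny toy. In the sketch below, the first sighting of a landmark extends the map, and a later sighting of the same landmark is used to pull a drifting pose estimate back toward consistency. This is only an illustration of the idea: real SLAM systems use probabilistic filters or graph optimization, and the class `ToySlam`, its `gain` parameter, and the landmark name are all hypothetical.

```python
import math


class ToySlam:
    """Toy 2D pose and landmark tracker; not a production SLAM algorithm."""

    def __init__(self):
        self.x, self.y, self.heading = 0.0, 0.0, 0.0
        self.landmarks = {}  # landmark id -> (x, y) in the world frame

    def move(self, distance, turn):
        """Dead-reckon the pose from (possibly noisy) odometry."""
        self.heading += turn
        self.x += distance * math.cos(self.heading)
        self.y += distance * math.sin(self.heading)

    def observe(self, landmark_id, rng, bearing, gain=0.5):
        """Use one range/bearing sighting to extend the map or correct the pose."""
        angle = self.heading + bearing
        seen_x = self.x + rng * math.cos(angle)
        seen_y = self.y + rng * math.sin(angle)
        if landmark_id not in self.landmarks:
            # Mapping: first sighting, so add the landmark to the map.
            self.landmarks[landmark_id] = (seen_x, seen_y)
        else:
            # Localization: blame the mismatch on odometry drift and
            # shift the pose by a fraction (gain) of the disagreement.
            map_x, map_y = self.landmarks[landmark_id]
            self.x += gain * (map_x - seen_x)
            self.y += gain * (map_y - seen_y)


slam = ToySlam()
slam.observe("door", rng=2.0, bearing=0.0)  # door mapped at (2, 0)
slam.move(distance=1.2, turn=0.0)           # odometry over-reports a 1.0 m step
slam.observe("door", rng=1.0, bearing=0.0)  # door now measured 1.0 m ahead
print(round(slam.x, 3))
```

After the second sighting the estimated position moves from 1.2 back toward the true 1.0, which is the "locate themselves within that mapped space" half of the description above.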

c. Object Recognition:

Spatial computing systems recognize and understand objects in the physical space, enabling digital content to interact realistically with the environment. This recognition is often facilitated by image processing and machine learning techniques.

4. Spatial Interaction:

a. Gesture Recognition:

Spatial computing systems often incorporate gesture recognition, allowing users to interact with digital content through hand movements, gestures, or other physical actions.
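A simple way to picture gesture recognition is a pinch detector: given tracked 3D positions for the thumb tip and index fingertip, a pinch starts when the two come closer than a threshold. The sketch below assumes some hand tracker already supplies those positions in metres; the 2 cm threshold and the function names are illustrative assumptions.

```python
import math


def is_pinching(thumb_tip, index_tip, threshold_m=0.02):
    """True when the two fingertips are closer than the threshold."""
    return math.dist(thumb_tip, index_tip) < threshold_m


def detect_pinch_events(frames, threshold_m=0.02):
    """Turn per-frame fingertip positions into pinch start/end events."""
    events = []
    was_pinching = False
    for thumb_tip, index_tip in frames:
        now_pinching = is_pinching(thumb_tip, index_tip, threshold_m)
        if now_pinching and not was_pinching:
            events.append("pinch_start")
        elif was_pinching and not now_pinching:
            events.append("pinch_end")
        was_pinching = now_pinching
    return events


# Three frames of hypothetical tracking data: open hand, pinch, open hand.
frames = [
    ((0.00, 0.00, 0.30), (0.06, 0.00, 0.30)),
    ((0.00, 0.00, 0.30), (0.01, 0.00, 0.30)),
    ((0.00, 0.00, 0.30), (0.05, 0.00, 0.30)),
]
events = detect_pinch_events(frames)
print(events)
```

Reporting start and end events rather than a per-frame flag makes it easier for an application to treat a pinch like a button press and release.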

b. Touch and Haptic Feedback:

Devices may provide touch-sensitive surfaces or haptic feedback to simulate the sense of touch in the virtual space, enhancing the user's overall experience.

5. Augmented Reality (AR) and Virtual Reality (VR) in Spatial Computing:

a. AR Integration:

Spatial computing is fundamental to AR, as it involves overlaying digital content onto the real-world environment. Accurate spatial understanding ensures that AR elements interact seamlessly with the physical surroundings.
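To see why spatial understanding matters for overlays, consider a virtual object anchored to a fixed point in the room. Each frame, that world point has to be re-projected into the display using the current camera position, so that the object appears to stay put as the viewer moves. The sketch below assumes a pinhole camera that only translates and always looks along the +z axis; the function name `project_anchor` and the intrinsics are hypothetical.

```python
def project_anchor(anchor_world, camera_pos, fx, fy, cx, cy):
    """Project a world-anchored point into pixel coordinates.

    Assumes the camera axes stay aligned with the world axes (no rotation).
    Returns None when the anchor is behind the camera.
    """
    x = anchor_world[0] - camera_pos[0]
    y = anchor_world[1] - camera_pos[1]
    z = anchor_world[2] - camera_pos[2]
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


anchor = (0.0, 0.0, 2.0)  # virtual object pinned 2 m in front of the start position
before = project_anchor(anchor, (0.0, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0)
after = project_anchor(anchor, (0.5, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0)
print(before, after)
```

When the camera steps 0.5 m to the right, the anchor's pixel position shifts left, which is exactly what a real object at that spot would do. A full implementation would also apply the camera's rotation.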

b. VR Spatial Immersion:

In VR, spatial computing creates a dynamic, immersive environment by understanding and responding to the user's movements within the virtual space. This enhances the sense of presence and realism.

6. Applications of Spatial Computing:

Spatial computing finds applications in navigation, gaming, design, healthcare, education, and more. From creating interactive digital experiences to improving real-world efficiency, spatial computing is reshaping how we interact with technology.
